This essay for Document’s Spring/Summer 2024 issue traverses arthouse cinema, Shein, and streaming to explore how the ‘technical image’ is changing the nature of meaning-making

We are living between two informational worlds: the one we think we know and the one that actually exists. The former lasted with relative stability for hundreds (and, by some measures, thousands) of years. It was organized by writing, truth, and fact. Most of us alive today are trained for that world, calibrated to it, but deep down we don’t fully believe in it anymore. Deep down, we can sense that the world we must navigate operates differently—and yet it’s unclear exactly how. The world we think we know is gone.

Example: I ask a 20-something New Yorker whose intuition I trust how she would describe her style, and she tells me that she doesn’t really have one. It’s more about a way of searching networks, she explains, a shared logic among her friends of traversing online space that delivers her to certain objects, places, experiences. It doesn’t matter whether the item actually fits or looks good or if the location is conventionally significant—just that it existed along an interesting network pathway. The style is incidental.

Example: Global fast-fashion retailer Shein adds thousands of new items to its site every day. There are no “collections,” no “seasons,” no indication of a world in which a particular set of objects might make sense. At a glance, the garments and other goods seem random. Far from the practice of 20th-century retailers allocating massive budgets to build a specific fantasy in their customers’ minds (think: Abercrombie & Fitch tapping Bruce Weber and Slavoj Žižek for its substantial late-’90s/early-’00s quarterly publication), Shein presents its goods as raw materials for the shopper to animate. More accurately, these objects are not “raw material” but—thanks to integrated software giving Shein’s manufacturers and designers near real-time insights into their prospective customers’ online activity—an emanation of whichever search terms and styles are gaining the most traction. In a sense, what Shein makes is less “fashion” than tag clouds de-virtualized and remixed by human designers.

Take, for instance, a black sweatshirt listed on Shein that features the word “CHICAGO.” The city’s name is written in a blackletter font above a stylized print of the hands from Michelangelo’s fresco The Creation of Adam. The design also includes the text “It is the third largest city in the United States” (twice), as well as the date “1898” (large, in heavy saloon-style lettering). With no contextualization of what the garment is supposed to signal, it is the customer who gives this aggregation of signs meaning. Or, better put, it’s the customer who hallucinates meaning onto it based on subconscious associations triggered by certain qualities of the text, layout, and graphic design (e.g. blackletter script connoting Latino gang aesthetics, the potential subconscious misreading of “Chicago” as “Chicano,” Chicago being historically linked to gangsters and present-day drill music, the date suggesting some important historical event, the Michelangelo motif giving the design a kind of memetic gravity). In machine learning, generative AI models also “hallucinate,” creating seemingly correct but factually false output that claims to describe something in the real world.

In the case of this sweatshirt, however, what “true” meaning is there? Trying to decode artistic or political intent is senseless, as the design elements weren’t put together with any top-down message. Rather, they appear together because of their algorithmic proximity according to Shein’s data and were combined with the sole aim of garnering sales.

To be sure, cultures have been appropriating each other’s signs and redeploying them with new meanings for millennia, but the kind of pattern recognition that’s reflected in this Shein example feels novel—both on the side of the creator/manufacturer and that of the receiver/customer. Rather than carrying information or meaning, the “content” has become a conductor of energy, affect, “vibes.”


This makes sense given that the internet runs on human expression. Affect-rich content drives platform engagement and therefore data cultivation—data that is used locally to build out identities of individual users, as well as anonymized data that is sold (or externally scraped) in bulk for use by third parties such as Shein or programmatic ad brokers. The entire clearnet media space—all the publicly visible, indexable digital places you can imagine (social media but also legacy media sites, independent blogs, search engines, e-commerce, fitness apps, ridesharing apps, etc.)—is impacted, if not directly governed, by this business model that incentivizes users to create. In turn, content has become essentially infinite: In 2023, an average of 4 million Facebook posts were liked and 360,000 tweets were sent every single minute. On the scale of the individual, those are overwhelming numbers. But with more than 5.3 billion individuals now online, it’s helpful to keep in mind that the number 4 million is less than 0.1 percent of the number of people currently using the internet. The total volume of human expression online is certain to grow.

It’s a common gripe that no one really reads anymore. Users react to content without fully comprehending what the author is trying to convey, or comment and share before clicking the link. They take information (whether text- or image-based) out of context and editorialize it with unrelated narratives or even hallucinate associations into it that the author never intended. To be sure, this repurposing of content isn’t classic détournement (playful misreading or reframing to reveal a deeper cultural truth). It’s more compulsive, more desperate, more about siphoning attention from higher-visibility sites. At its worst, this downstream assignment of meaning can lead to chaos (see: Balenciagagate). But what if, for better or worse, this non-reading mode is a form of adaptation: an evolutionary step in which we’ve learned to scan, like machines, for keywords and other attributes that allow for data-chunking, quickly aligning a piece of content with this or that larger theme or political persuasion? What if, in a time of infinity-content, a meta reading of the shape and feel of content has become a survival skill? The ability to intuit a viable meaning via surface-level qualities—ones that are neither text nor image but a secret third thing—is now essential for negotiating our sprawling information space. Perhaps we’re tapping into a more primal human intelligence.

But it’s not only a matter of us “not reading.” Content itself (whether breaking news, academic paper, open letter, or personal tell-all) no longer primarily functions as a vehicle for transmitting information from authority to audience, or even peer to peer. Per the contract of the platform economy, the job of content in algorithmically determined spaces is to conduct the attention, if not also behavior, of a network. In turn, factuality, originality, and style matter less than where and how content circulates and what kind of meaning its recipients can read (or hallucinate) into it. Cue the Shein model.

The combination of these factors—an infinite availability of content, our adaptation of sensing more than reading, and content itself being more of a catalyst than an end product—has also changed where the creative act now happens. Our first example of the young New Yorker who thinks of fashion in terms of paths through networks rather than codified “looks” is germane here. Creative agency is now located further upstream in programming platforms (i.e. setting the parameters for what content can be and how we engage with it), further downstream via receiving users (for example, creative directors with enough clout and visibility to aggregate content, and therefore networks, into coherent cultural statements), or both. This is why figures such as Virgil Abloh, Anne Imhof, Demna, and Ye have been among the most influential artists and cultural creators of the past decade—less for what they originated than what they distilled and repositioned in the stream.

In this new world, where content functions more to conduct audiences than to transmit concrete information, the substance of TV and film is changing, too. In the New Models Discord server, filmmaker Theo Anthony (All Light, Everywhere [2021]) recently used the term “mood-board cinema” to describe shows that hinge less on storytelling or character development than, in his analysis, viewers’ “pre-existing attraction to a scattershot tag cloud of references in the absence of narrative.” With 2.7 million unique titles across all platforms (streaming and linear) currently available to US viewers, TV creators are also optimizing for algorithmic visibility. Maybe this is why a lot of streaming content now feels so flat. It’s sort of like the minimum viable version of dining one finds at the airport: its surface qualities and staging are convincing enough to drive the sale, but the contents feel simulated, approximated, even beside the point.

Night Country (season four of HBO’s True Detective franchise) is an example of this. Set north of the Arctic Circle during the continuous darkness of late December, it features Jodie Foster and John Hawkes, uses Billie Eilish’s “Bury a Friend” for its title sequence track, and piggybacks on the stellar reputation of the show’s first season. But to watch Night Country is to experience a Shepard tone of plot advancement (any semblance of continuous narrative is there only to hold attention across episodes), with dialogue so insipid you wish they’d used GPT to help with the writing (it includes quotable lines of the caliber of “Some questions just don’t have answers.”) and a reliance on cinematic tropes and political clichés that hint at pressing issues but fail to deliver anything deeper (detectives going rogue to get the job done, a portrayal of systemic violence toward an Indigenous population, cops abusing power except these cops are female…). Yet Night Country is the most-watched of all True Detective seasons, so HBO has renewed the series for another round. Anthony attributed the show’s success to its abundance of high-traction tags (“noir, horror, John Carpenter, Indigenous rights”), noting that while it “contains ‘hot’ references and ‘hot’ issues,” it “relies on the fact that they’re already hot, rather than risk[ing] handling them in any way.” This allows the creators to “appeal to the most, offend the least, and continue to optimize streamer metrics.”

The only actually eerie thing about True Detective: Night Country is realizing the degree to which the visibility mechanics of tech platforms are mid-ifying what this new era of content-as-catalyst could be. Meanwhile, there has been a surge of discussion around the ethical and aesthetic implications of artificial intelligence for the culture sector as generative machine learning models such as OpenAI’s DALL-E and Stability AI’s Stable Diffusion gain mainstream adoption. You know the subject headers already: How will AI change the role of the artist? What will it do to authorship and originality? How will it avoid reinforcing bias? How will it change our conception of real and unreal, fiction and fact? These concerns are valid, as machine learning models will certainly accelerate each of these dynamics. But what if we thought about generative AI more as an expression of an epochal shift in human communication than a root cause? What if there’s something deeper, older, even organically emergent that has been nudging us to perceive and communicate in a fundamentally different way and “machine learning neural networks” are, rather, answering that call?

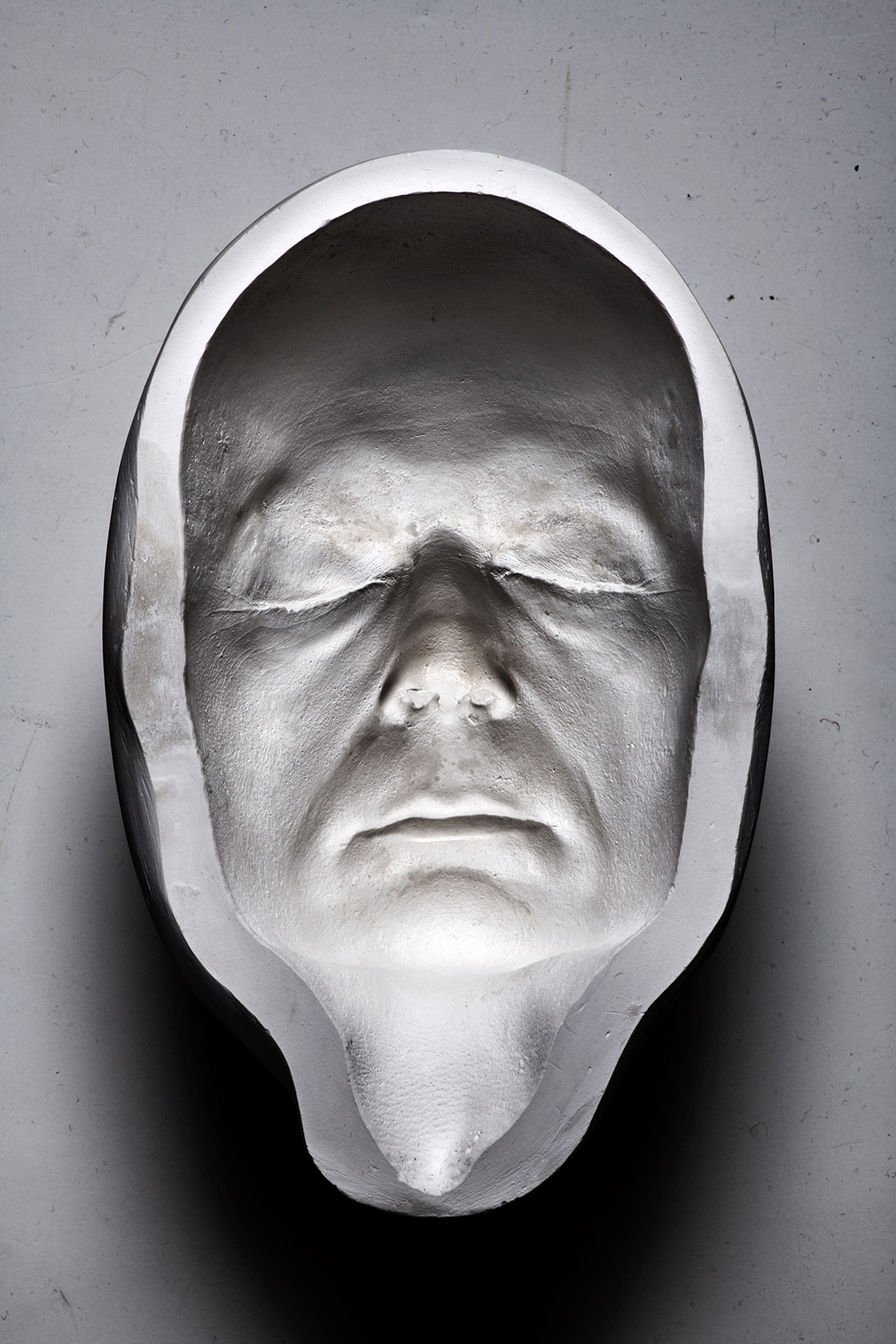

A half-century ago, media theorist Vilém Flusser noticed something profound happening to human communication. After thousands of years of compressing experience and knowledge into lines of written language (imagine all of the complexity of, say, the fall of Rome, simplified into unidirectional sequences of text), we were using new technologically assisted media (photographs, video, computer graphics, etc.) to store and transmit experience and knowledge non-linearly. While this added dimension, it also introduced more abstraction. For instance, it would have been hard for American civilians to distinguish between the representations of the Vietnam War being live-broadcast into their living rooms and the out-of-frame reality on the ground. Flusser classified this kind of media as “technical images,” which compress and unpack information using digital abstraction, computation, and/or simulation.

In media theory, humans are distinguished from other living beings by the ability to communicate across time and space, storing and transmitting information beyond the lifespan of an individual. Early humans accomplished this by compressing knowledge into drawings, which they animated with stories and rituals to be passed down through generations. But images can be easily overwhelmed by competing interpretations, and when writing emerged, it allowed images to be inscribed with “correct” or “original” meanings. We can think of this, as Flusser did, as the translation of embodied knowledge into alphabetic code. This allowed infinite complexity to be reduced to a format that could be easily shared, uniformly transcribed, systematically archived, and readily redistributed. The technology of writing was so fundamental (to power, to civilization’s stability) that it became the basis for developing and recording human knowledge. Yes, other media existed in parallel, but writing was dominant: Every major religion has a “great book”; every nation, a written declaration of its foundation; every citizen, identity cards and papers. For millennia, writing organized society, mediating knowledge and power. Being cumulative, writing also encouraged historical consciousness and established our linear sense of time. We calibrated ourselves to this system, using it as the basis for science and law and philosophy and commerce. While the cost of reducing reality to alphabetic code was the loss of detail, dimension, and alternative (often subaltern) perspectives, it was nevertheless a system of abstraction that gained universal adoption and one to which we adapted on a species level. Essentially, we coevolved with text and, for a long time, believed in its inalienable capacity to communicate—in the beginning there was the word, etc.—and we therefore became textual humans.

But we textual humans, so Flusser argues, are now living in a world organized not by text but by these technical images, which is to say media that compresses reality via processes we do not fully understand. According to this idea, we now predominantly transmit information in ways that exceed the limits of alphabetic code, and we gained this ability so rapidly that we are civilizationally unprepared to absorb the shift. Media has become “pseudo-magical,” Flusser wrote in 1978, amid a culture increasingly defined by TV with the potential of computer-aided cybernetic media coming into view. “The climate is curious because the symbols are incomprehensible even if we produce them.” It was suddenly as if signs no longer had specific denotative meanings; they had ambient powers.


Perhaps this is in part why trust in academic and cultural institutions has waned in recent decades. Their authority is rooted in denotative systems of information transfer, which worked as long as we still believed in text. But as connotative forms of communication (meaning determined through associations and affect) overtook the denotative function of text, institutions have struggled to adapt. Critically, communicating by scanning and sensing overrides the longstanding cultural and class divisions imposed by text-based literacy, which is at once incredibly exciting and a key to why democratic processes (US and EU governance, not least) now feel like such a sham. As the political scientist Kevin Munger (Generation Gap: Why the Baby Boomers Still Dominate American Politics and Culture) writes, “Enough people need to be able to talk with each other—and specifically to write to and read each other—to enable the deliberation upon which liberal reason is premised.” We are still programmed to read signs directly, to attempt a denotative decoding, but there are just too many applications and interpretations of any given sign or data point to enact actual democratic deliberation. So instead we sense, basing our decisions on the shape and feel of the content and squaring that with whatever meaning feels closest (and therefore “truest”) to our personal experience.

Looking to the future, maybe all of this human sensing will, for better or worse, be channeled into a new kind of governance. One could imagine, say, a machine-learning-assisted process more accurately reflecting the needs and intentions of Americans than the nation’s electoral college (which elects the US president based on state-by-state majorities of tallied votes). This would be possible because the internet itself now senses us, re-encoding all content that flows through it, processing every product launch, policy change, and TV series, as well as our every utterance in response into mathematically precise, high-dimensional representations. That meta-reading survival skill mentioned earlier (i.e. communicating via a secret third thing that’s neither text nor image)? Machine learning engages in something analogous. Unlike text, this form of compression is fundamentally nonlinear. Yet unlike images, it encodes a meaning that is nevertheless definite and calculable. Loosely speaking, machine learning reads the shape and feel of a thing and assigns it a position in relation to N-number of other things, like a hyper-specific kind of connotative thinking. This isn’t exactly the same as human cognition, but it nevertheless tracks with a fundamental change in the way we process information. Moreover, it’s a class of media—what K Allado-McDowell has theorized as “neural media”—that communicates back. In doing so, it nudges us to speak in connotative metatext: as if, knowing the machine is sensing us, we reflexively seek to become machine-legible, perhaps even machine-like. After all, it is through the machine, through its processes of embedding, that we are now legible to each other across time and space. (Think of Google Search or, more critically, AI-powered chatbots such as OpenAI’s ChatGPT or Microsoft Bing’s Copilot, which increasingly serve as research aids. The latter was invaluable in writing this piece.)

Film01: Histoire(s) de l’internet by the artist/internet presence angelicism01 is a 2023 cinematic work composed entirely of other people’s footage—some submitted, most scraped from the internet—that tells a story of imminent extinction and an infinite drive to transmit oneself through the network. Across three hours, the screen pulses with “content.” There are sequences of sunsets, rainbows, and crepuscular god rays—so many and in such rapid succession that these extraordinary moments merge into the generalized platonic essences of “sunset,” “rainbow,” and “blessed light.” Then we see a POV video of Japanese schoolgirls doing parkour and repeatedly leaping to their seeming deaths (actually a 2014 viral stunt promoting Suntory’s C.C. Lemon drink). Next are TikTok montages, sad girls dancing in their rooms, people talking about Film01 itself blended with K-pop performances, the voice of Jean-Luc Godard speaking about iPhones. Cut to CNN footage of the arraignment of Donald Trump, a young hand in a fingerless glove flipping through Heidegger’s Being and Time. The sound is layered, the source of each channel (and to what layer of mediation it corresponds) often unclear…

I want to go to the internet in heaven with @usergirl9999 / angel rising goth moon playing Elden Ring in offline mode / do not expect to see 20471120 in a video game piece today… [On screen: a totaled car and a view of someone’s phone playing an Eartheater track on Spotify, then various screen recordings, phones recording screens containing texts, maybe Wikipedia, a Wikipedia page for the French composer Olivier Messiaen, who wrote his elegiac Quatuor pour la fin du temps in 1940–41 while held in a German prisoner-of-war camp.] …this summer was tragic / mumblecore it-girl searching obscure terms in 4chan archive and gaining access to secret knowledge / mumblecore it-girls just go blah, blah, blah / angelicism is a serial killer or something, I don’t even know… [cut to black; then clips rapid-firing as if rounds of an automatic weapon, before slightly longer clips of young women filming themselves dancing, walking, just being; an overlay of the Twin Towers on fire…]

There is so much “content” in Film01, it’s as if the entire internet—or, at least, the affective fringe frequented by the extremely online—were uploaded and recomposed with machine vision. To describe each segment feels akin to describing a piece of Shein streetwear: the elements are often inscrutable, their juxtapositions often inappropriate (Messiaen plus mumblecore and it-girls). And yet a kind of meta-informational layer nevertheless comes through. In fact, when viewing Film01, just as when shopping Shein, scrolling TikTok, or scanning world news, one automatically reads past the “content” to the metatext. This kind of reception and production of meta-content has long been a key creative skill, but it’s increasingly an automatic life skill for basic communication. Everyone and their cousin thinks in memes now and/or is able to quickly ascertain the essence of a piece of content through the content’s immediate connotative properties. And yet, we still think we think in facts. We still think we think and comprehend according to the denotative terms of text-based knowledge. “Mumblecore it-girl searching obscure terms in 4chan archive and gaining access to secret knowledge”: the voiceover is literally incoherent, yet it still conveys something (a mood, a vibe, a milieu). Perhaps this is the elusive voice of Flusser’s “technical imagination” coming through.

The questions are now: How do we know what we know? How do we know the shape and feel of our own networks and whether they’ll be at all legible to those traversing them in the future? What happens to our world when our space of communication—the space of intergenerational knowledge transfer—is determined not by writing, truth, and fact but by intuition, proximity, and hallucination? It means, I think, that we are newly free to renegotiate the metaphysical parameters of our reality. That may sound like hyperbole, but when we consider the degree to which the technology of writing contributed to our linear understanding of time, why wouldn’t this age of neural media enable us to experience time differently? Why wouldn’t it enable us to experience a new level of “the real”? Perhaps it already has.